| Authors: | A. Panousopoulou, S. Farrens, K. Fotiadou, A. Woiselle, G. Tsagkatakis, J-L. Starck, P. Tsakalides |
| Journal: | arXiv |
| Year: | 2018 |
| Download: | ADS, arXiv |

Abstract

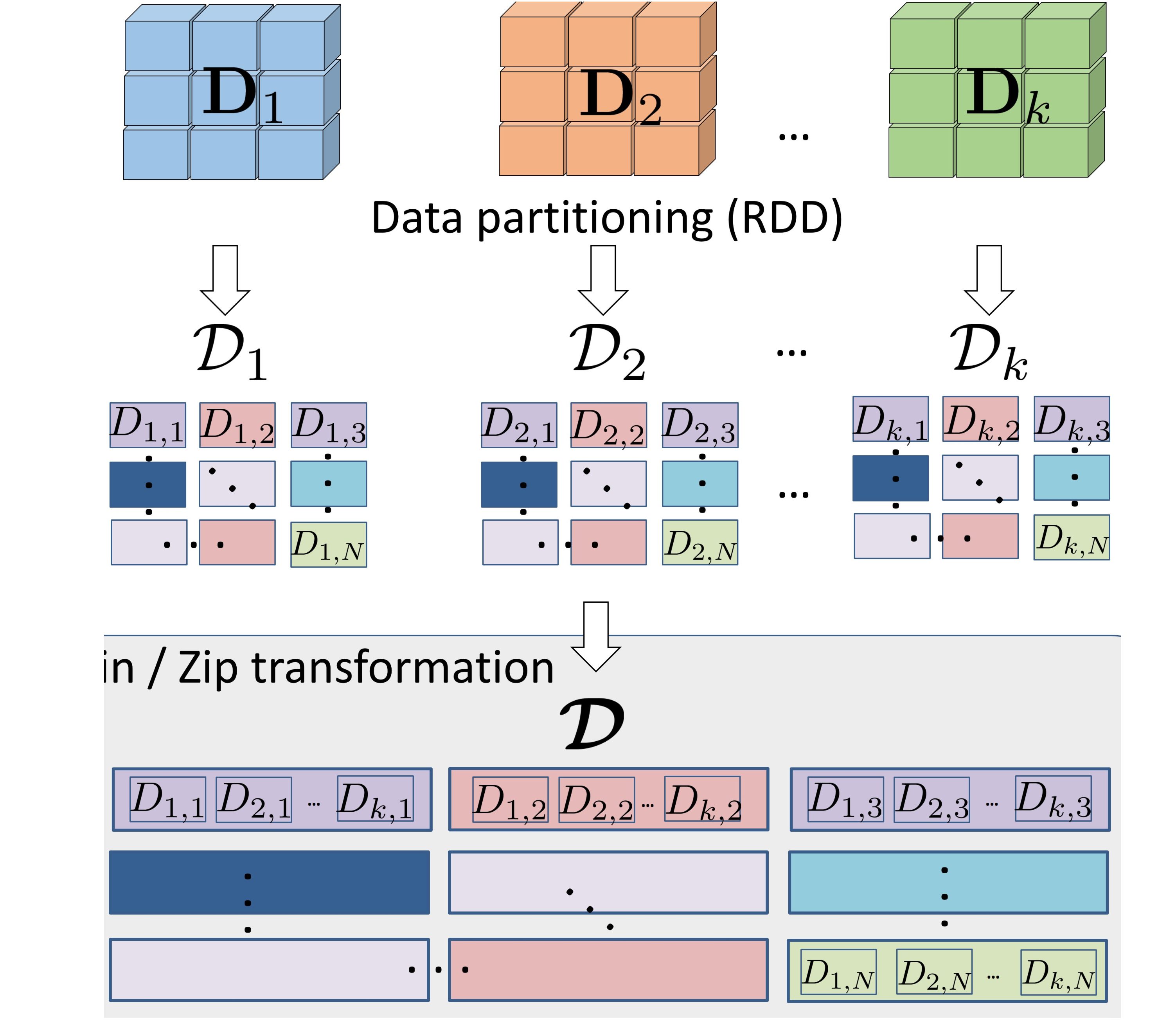

Current trends in scientific imaging are challenged by the emerging need to integrate sophisticated machine learning with Big Data analytics platforms. This work proposes an in-memory distributed learning architecture that enables sophisticated learning and optimization techniques for scientific imaging problems, which are characterized by the combination of variant information from different origins. We apply the resulting Spark-compliant architecture to two emerging use cases from the scientific imaging domain: (a) space-variant deconvolution of galaxy imaging surveys (astrophysics), and (b) super-resolution based on coupled dictionary training (remote sensing). We conduct evaluation studies on relevant datasets, and the results show at least a 60% improvement in response time over conventional computing solutions. Finally, the discussion offers practical insights into the impact of key Spark tuning parameters on the achieved speedup and on the memory/disk footprint.
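
To relate the quoted improvement in response time to the speedup mentioned in the last sentence, the following minimal sketch may help. It assumes the 60% figure denotes a fractional reduction in wall-clock time relative to the conventional baseline; the abstract does not state the exact definition, and the function names are hypothetical, chosen here for illustration only.

```python
def time_reduction(t_baseline: float, t_distributed: float) -> float:
    """Fractional reduction in run time relative to the baseline.

    Assumption: "improvement in response time" means
    1 - t_distributed / t_baseline (not stated in the abstract).
    """
    if t_baseline <= 0:
        raise ValueError("t_baseline must be positive")
    return 1.0 - t_distributed / t_baseline


def speedup_from_time_reduction(reduction: float) -> float:
    """Speedup t_baseline / t_distributed implied by a fractional time reduction."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must lie in [0, 1)")
    return 1.0 / (1.0 - reduction)


if __name__ == "__main__":
    # Illustrative timings only, not measurements from the paper.
    r = time_reduction(t_baseline=100.0, t_distributed=40.0)
    print(f"time reduction: {r:.0%}")
    print(f"implied speedup: {speedup_from_time_reduction(r):.2f}x")
```

Under this reading, a time reduction of at least 60% corresponds to a speedup of at least 2.5 over the baseline.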